Unveiling AI Risks in Self-Driving Cars

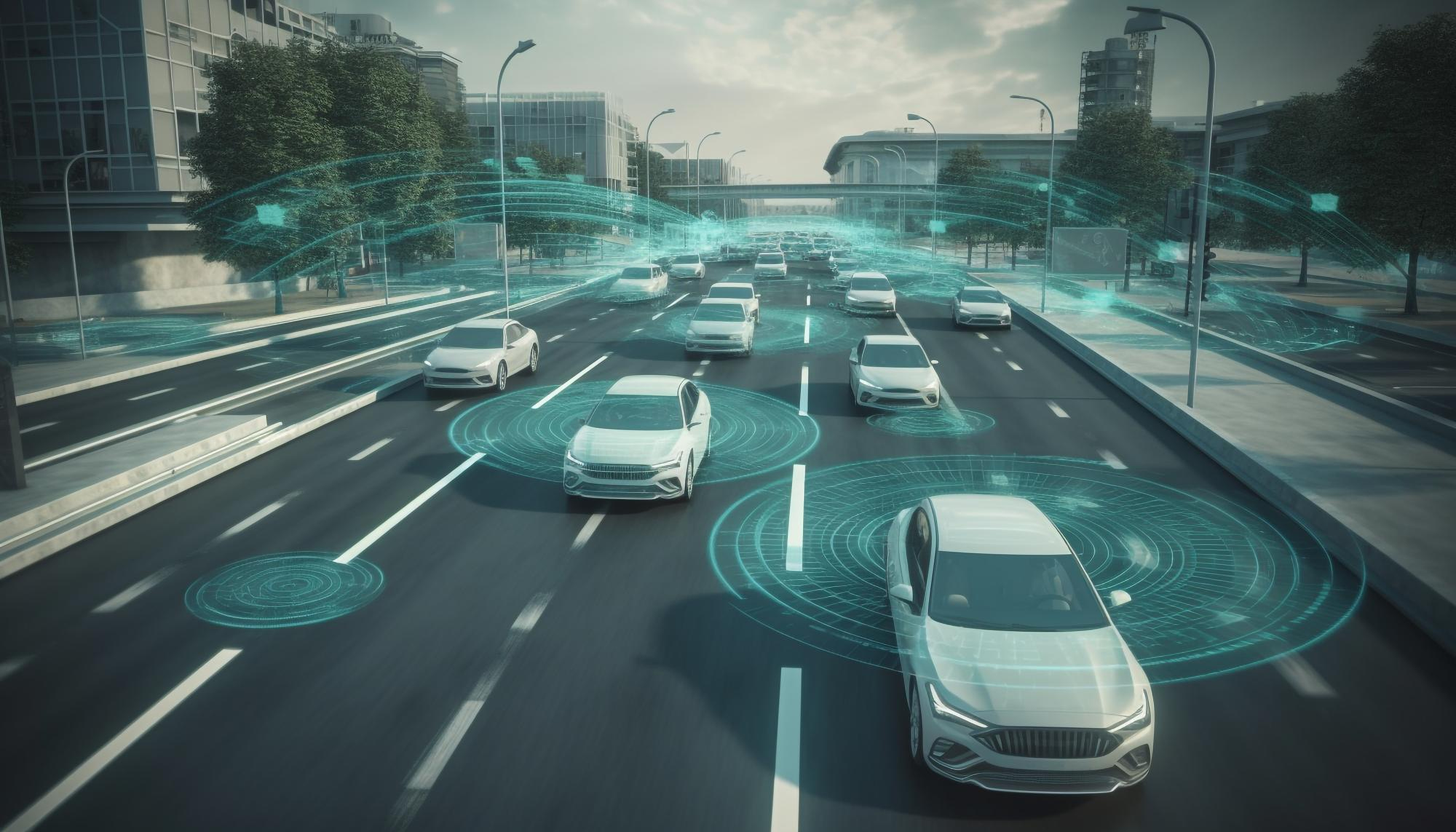

The rise of self-driving cars has sparked considerable debate about how artificial intelligence (AI) affects our day-to-day lives. Having collaborated closely with the U.S. National Highway Traffic Safety Administration (NHTSA), I gained exclusive insight into automotive AI and its potential hazards. This blog post shares five key findings from that experience, to give a clearer picture of the obstacles that must be addressed in understanding and regulating AI in self-driving vehicles.

Human Errors in Operation Get Replaced by Human Errors in Coding

Proponents of autonomous vehicles frequently point to safety as a major advantage of eliminating human drivers. However, this overlooks the fact that people still write the software behind these AI systems, and that software can contain mistakes. Recent crashes involving self-driving cars show how latent errors or faulty programming can have dangerous consequences. To guard against such risks, thorough testing and regular updates are needed, along with government intervention to ensure companies don’t rush products to market before they’ve been properly evaluated.

AI Failure Modes Are Hard to Predict

Self-driving cars and large language models (LLMs) both rely on statistical reasoning to approximate their decisions, but the real world is too unpredictable to be fully modeled. Phantom braking incidents illustrate this: the vehicle brakes with no real obstacle ahead because the AI fails to make sense of a situation it was not prepared for. As AI technology continues to spread across sectors, developing robust methods for managing unexpected failures remains a major challenge.

Lack of Judgment in the Face of Uncertainty

The Importance of Maintaining AI

AI models need current, relevant data in order to function correctly. As new things appear on the road, such as new kinds of vehicles and new traffic regulations, the models must be updated frequently to prevent “model drift.” Governments and organizations need to recognize the importance of maintaining AI systems and plan ahead for regular updates.
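One rough way to picture model drift is to compare what a model was trained on with what it encounters today. The sketch below is only an illustration under stated assumptions: the object labels, the counts, and the `DRIFT_THRESHOLD` value are all hypothetical, and total variation distance is just one simple measure chosen for clarity, not a method taken from any real vehicle system.

```python
from collections import Counter


def category_shares(labels):
    """Return the fraction of observations that fall in each category."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}


def total_variation_distance(reference, recent):
    """Half the summed absolute difference between two category
    distributions: 0 means identical, 1 means no overlap at all."""
    ref = category_shares(reference)
    new = category_shares(recent)
    categories = set(ref) | set(new)
    return 0.5 * sum(abs(ref.get(c, 0.0) - new.get(c, 0.0)) for c in categories)


# Hypothetical data: objects in the training set vs. objects seen recently.
training_labels = ["car"] * 70 + ["truck"] * 20 + ["bicycle"] * 10
recent_labels = ["car"] * 50 + ["truck"] * 20 + ["bicycle"] * 10 + ["e-scooter"] * 20

DRIFT_THRESHOLD = 0.1  # illustrative value only, not a recommended setting

drift = total_variation_distance(training_labels, recent_labels)
needs_review = drift > DRIFT_THRESHOLD  # flag the model for retraining review
```

In this made-up example, a category the model never saw in training (e-scooters) accounts for a fifth of recent observations, so the measured distance exceeds the threshold and the model would be flagged for review. A real maintenance program would track far more than label frequencies, but the principle is the same: monitoring has to be planned and budgeted, not assumed.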

System-Level Implications of AI

Self-driving cars have safety features that bring the vehicle to a stop when the system is uncertain. However, these stops can create problems of their own, such as blocked roads and traffic jams. Moreover, reliance on wireless connectivity can leave vehicles unable to recover when connections are lost. The system-level implications of AI require careful consideration, as mishandling such challenges could lead to public distrust and backlash against the technology.

The Need for Educated Regulation

To address these risks and effectively regulate AI, the government must prioritize technical competence in AI across all relevant agencies. Recruiting and empowering experts who understand the strengths and weaknesses of AI is essential for balanced policymaking. Collaboration between academia, industry, and the government can help bridge the knowledge gap and ensure that discussions about AI regulation are grounded in technical expertise rather than industry interests or unfounded fears.

Navigating the Road Ahead

AI, especially in the realm of self-driving cars, holds tremendous promise but also comes with inherent risks. Understanding the complexities and challenges of AI is critical to crafting informed regulations that prioritize both safety and innovation. By investing in education, improving government expertise, and fostering constructive dialogue, we can navigate the future of AI responsibly, driving technology forward while safeguarding the well-being of society. With knowledge and informed decision-making, we can realize the potential of AI while taming its risks.